Social Engineering and AI-Generated Phishing Messages

Dave Piscitello

Social engineering is an attempt to influence or persuade an individual to take an action.

Some social engineering has public benefits. For example, a center for disease control runs an advertisement encouraging you to get a free influenza or COVID vaccine, and in doing so it may explain how you will protect yourself as well as elderly and other vulnerable populations. Other social engineering has public benefits but carries a financial incentive, too: a pharmaceutical company’s advertisement warns you of the miseries of shingles and promotes its new vaccine.

There’s a not-so-subtle difference between these two forms of persuasion. The first tries to influence you to do something positive for yourself and others. The second uses a negative emotion to compel you to rush off and get vaccinated before another minute passes.

Emotion-driven impulsivity?

Cybercriminal social engineering typically plays on emotion - positive or negative - to cause an individual to act in haste (emotion-driven impulsivity). I’m sure that you’re familiar with these types of emails or texts that attempt to exploit emotion-driven impulsivity:

Fear, uncertainty. Your credit card has been suspended due to suspicious activity, you’ve failed to pay a highway toll, your checking account is overdrawn, you are delinquent in taxes, or your computer has a virus and requires immediate tech support.

Greed. You’re offered a large sum of money if you pay a fee in advance to assist with the transfer of an estate.

Excitement. You’ve won a prize, an auction, or a lottery.

Compassion. A friend or acquaintance - or an astronaut - has lost their wallet or passport while traveling. They are stranded and need cash to return home. Or, very commonly, your grandchild has been arrested or kidnapped and needs bail or ransom money.

These are all phishing bait, and some - stranded traveler, advance fee fraud - have been around for so long that they are archetypes.

The first goal of phishing is to create a convincing scenario that will compel someone to take the bait - visit a link, reply to a text or email, or call a telephone number. The links in those texts or emails are the hooks that draw someone to a fake web site. At the fake page, the second and ultimate goal is again to create a convincing deception and, if the deception succeeds, to steal something of value, e.g., credit card data, user account credentials, or personal information.

AI-Generated Phishing Messages

The most adept criminals make very convincing impersonations of legitimate correspondence. But even novice cybercriminals can use AI to make phishing messages that are nearly indistinguishable from legitimate correspondence. Mike Wright has put a nice post together, How to Spot AI Generated Phishing Emails, where he offers 10 indicators (red flags) that can help you tell if an email message is an AI-generated phish.

I’m not sold on the claim that “AI will phish better than humans”. I still wonder how AI can create a better deception than an attacker who clones the actual correspondence and web pages of the brand they intend to impersonate. But whether the message is cloned or AI-generated, remember that:

No matter how convincing a phishing email appears, the phisher must use their domain names to send you mail and to lure you to their fake site with a URL.

If there is one constant in the phishing universe, it’s that the phisher has to draw you to their fake site before they can exploit you… and they’ll need a domain name, URL, or mobile number to do that.

My advice? Assume a message is a potential phish and check names and numbers. For email:

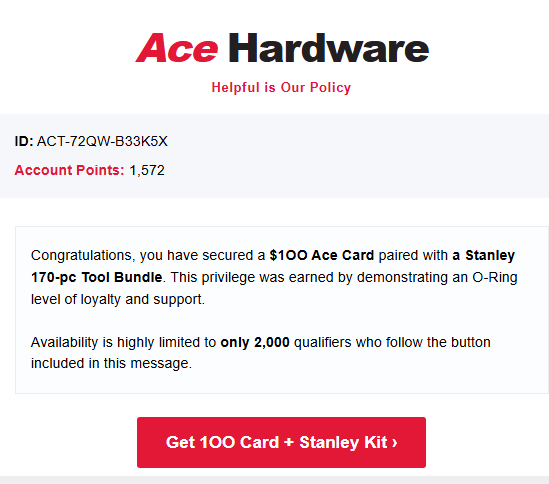

Check the “From:” address carefully to see if the domain name is one that you trust or matches the legitimate organization. This email is a convincing bait:

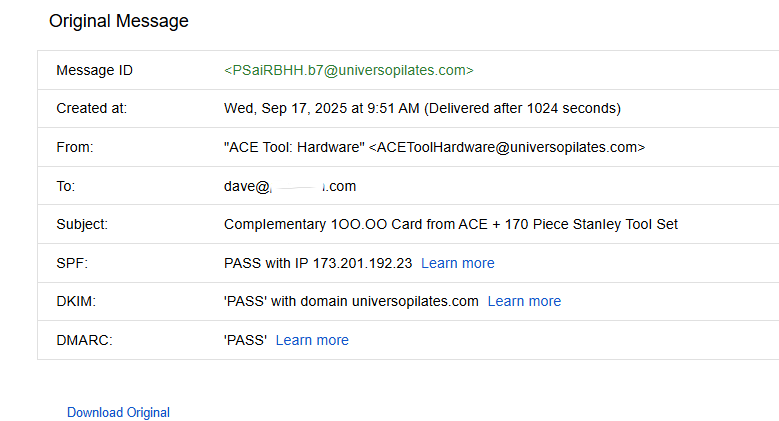

But the domain of the sender’s email address is suspicious, unrelated to Ace Hardware, so go no further.

If you decide to trust the sender, next examine or hover over all the buttons, images, linked text, and URLs in the message to see if any of these appear suspicious. If you could hover over the button below, you would see a defanged version of the phisher’s URL embedded in the button:

This is the same domain as the email sender, and there’s no apparent connection to Ace Hardware (or acehardware.com).
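The hover check can be sketched in Python as well. The example below collects every link target in an HTML message body, looks at the host name in each one, and prints a defanged copy of any link that does not belong to the expected domain. The message body, the host `deals.example.net`, and the helper names are all hypothetical, and the `hxxp` / `[.]` substitution is just one common defanging convention.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, including image buttons."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def link_hosts(html_body: str):
    """Return (url, host) pairs for each link in the message body."""
    parser = LinkCollector()
    parser.feed(html_body)
    return [(url, (urlsplit(url).hostname or "").lower()) for url in parser.links]


def is_trusted(host: str, trusted: str) -> bool:
    """True if host equals the trusted domain or is a subdomain of it."""
    return host == trusted or host.endswith("." + trusted)


def defang(url: str) -> str:
    """Make a URL unclickable so it can be shared safely."""
    return url.replace("http", "hxxp", 1).replace(".", "[.]")


# Hypothetical message body: one genuine link, one button that goes elsewhere.
body = (
    '<a href="https://www.acehardware.com/">Ace Hardware</a> '
    '<a href="https://deals.example.net/claim?id=1"><img src="button.png"></a>'
)
for url, host in link_hosts(body):
    if not is_trusted(host, "acehardware.com"):
        print("suspicious:", defang(url))
```

The point of reading the `href` rather than the visible text is the same as hovering: the words or image on a button say whatever the phisher wants, but the link has to name the phisher’s host.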

Thanks, and please share any advice or anecdotes about your AI-generated phishing experiences with us.

Past Insights posts have discussed deceptive URL composition. The examples we share in these posts were taken from the wild.

Exploiting well known TLD strings in domain names

https://interisle.substack.com/publish/posts/detail/169558998

Common Visual Deceptions in Phishing URL Composition

https://interisle.substack.com/p/common-visual-deceptions-in-phishing?r=59cehk

Phishing in the 2020s: Brand Used in Phishing Attacks

https://interisle.substack.com/p/phishing-in-the-2020s-brand-used?r=59cehk

I agree, always assume the worst, it sucks but it is the way to go especially with technology. I’m really worried for the older generation, I know they are taking advantage of them.